Landscape of AI Computing

Artificial intelligence (AI) has permeated countless fields, powered by advances in the latest generative architectures, to the point where some form of artificial general intelligence (AGI) seems tantalizingly plausible in the near future. Years of a virtuous co-design cycle have also established transformers and GPUs as the unquestioned (and perhaps slightly uncomfortable) de facto choice for cutting-edge AI development. Yet few realize that serving a generative AI model in an interactive application typically uses only a low single-digit percentage of a modern GPU’s compute capability! Why, then, is the industry still paying huge premiums for GPU infrastructure? A short history lesson helps explain it, but, spoiler: it’s the software.

Programming Abstractions and “New” Normals

If you learnt programming in school, chances are that your first program was written for a microprocessor (CPU) target. For AI programs and models to run on GPUs by default today, several things had to come together about a decade ago:

1. GPUs were already available in computing systems to accelerate…well…graphics. Over the years, GPUs had proven beneficial enough for that class of work to justify a break from the programming models that previously prevailed on CPUs

2. Nvidia’s persistent and meticulous seeding of research labs had exposed a crop of engineers to the CUDA programming model and to expressing non-graphics workloads, such as AI, so that they run on the GPU’s programmable shader cores

3. Matrix multiplications, key building blocks of the leading neural network architectures, mapped incredibly well to the massively parallel execution environment of GPUs

Although the AI software ecosystem has matured with the development of powerful AI frameworks, middleware, optimization libraries, and distributed software, all of it is still built on the fundamental tenets of GPU programming: thread-block-based parallelism, unified shared memory, and work delivered via kernel dispatch.
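
To see why matrix multiplication maps so well onto this model, the sketch below emulates a CUDA-style launch in plain Python: a grid of thread blocks in which each “thread” computes one element of C = A @ B from its block and thread indices. It is an illustration under stated assumptions, not CUDA code: the nested loops stand in for threads that a GPU would run concurrently, `BLOCK_DIM`, `matmul_thread`, and `launch_matmul` are hypothetical names, and the shared-memory tiling that real kernels use is omitted. Because no output element depends on any other, every thread can run independently.

```python
# Plain-Python emulation of a CUDA-style grid of thread blocks (not real CUDA).
# Each "thread" computes one element of C = A @ B; the loops in launch_matmul
# stand in for threads a GPU would execute in parallel.

BLOCK_DIM = 2  # hypothetical: threads per block along each axis


def matmul_thread(A, B, C, block_idx, thread_idx):
    # Global (row, col) derived as in CUDA: blockIdx * blockDim + threadIdx.
    row = block_idx[0] * BLOCK_DIM + thread_idx[0]
    col = block_idx[1] * BLOCK_DIM + thread_idx[1]
    # Guard: threads that fall past the edge of the output do nothing.
    if row < len(A) and col < len(B[0]):
        C[row][col] = sum(A[row][k] * B[k][col] for k in range(len(B)))


def launch_matmul(A, B):
    m, n = len(A), len(B[0])
    C = [[0.0] * n for _ in range(m)]
    # Enough blocks to cover the output (ceiling division per axis).
    grid = ((m + BLOCK_DIM - 1) // BLOCK_DIM, (n + BLOCK_DIM - 1) // BLOCK_DIM)
    # Every (block, thread) pair is independent, so execution order is irrelevant.
    for by in range(grid[0]):
        for bx in range(grid[1]):
            for ty in range(BLOCK_DIM):
                for tx in range(BLOCK_DIM):
                    matmul_thread(A, B, C, (by, bx), (ty, tx))
    return C
```

For example, `launch_matmul([[1, 2], [3, 4], [5, 6]], [[1, 0, 2], [0, 1, 3]])` launches a 2×2 grid of 2×2 blocks and returns `[[1, 2, 8], [3, 4, 18], [5, 6, 28]]`; the guard skips the threads that fall outside the 3×3 result.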

A promising new compute architecture faces an uphill climb to deliver enough differentiated value if it is also burdened with low-level compatibility, as was the case for GPUs when they first appeared to accelerate graphics. After a period of disruption, however, a new normal was established in which graphics programs were written for GPUs. Is the computing industry now at a point where a new normal of AI device abstraction is needed to truly deliver the performance jump it requires?

Work Ahead and What We Are Doing

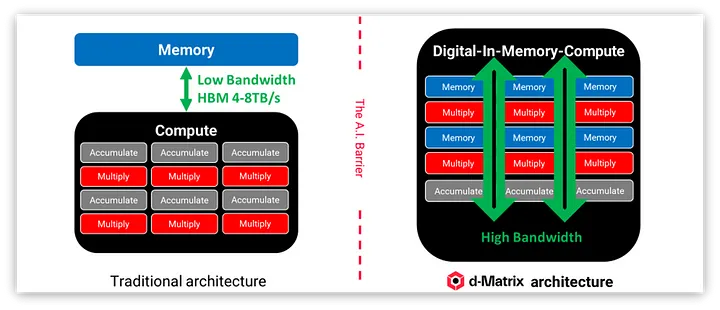

At d-Matrix, we are excited to be part of this era of AI technology and to help push the frontier of computing systems. Generative AI workloads are unusual in that the prompt (prefill) phase is compute-bound, while the token generation (decode) phase is memory-bound: prefill processes all prompt tokens in parallel, whereas each decode step must stream the model weights from memory to produce just one new token per sequence. We have been working on a variety of innovations in pursuit of the next new normal:

1. A novel hardware architecture based on in-memory computing that meets the extremely high memory bandwidth needs of generative AI

2. Scaling from chiplets to clusters over a standard fabric

3. Tooling for efficient model optimization that leverages novel datatypes, including MX. Our tools (DMX Compressor) were presented at the recent PyTorch Conference and are available as open source

4. A prototype PyTorch backend for a dataflow architecture that uses the graph compilation support of TorchDynamo to deliver work to the hardware, while preserving the graph breaks and debug hooks that users rely on

5. Programming models, DSLs, and IRs that depart from GPU-style thread blocks and are more expressive for spatial architectures and for devices with multi-level memory hierarchies

6. Primitives for efficient network communication between devices, and methods for achieving it with minimal synchronization between accelerators and hosts
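
The prefill/decode asymmetry described above can be checked with a back-of-the-envelope roofline estimate. The sketch below is a simplification: it assumes 16-bit weights and activations, counts only one linear layer’s weight, input, and output traffic (ignoring KV-cache reads and on-chip caching), computes arithmetic intensity in FLOPs per byte, and compares it against a hardware ridge point (peak FLOP/s divided by memory bandwidth). The layer size `HIDDEN = 8192` and the ridge point `RIDGE_POINT = 300.0` FLOP/byte are hypothetical values chosen for illustration, not measurements of any particular device.

```python
# Roofline sketch: is a linear layer compute-bound or memory-bound?
# Assumptions (hypothetical, for illustration only): 16-bit data,
# an 8192 x 8192 weight matrix, and a ridge point of 300 FLOP/byte.

BYTES_PER_ELEM = 2     # e.g. bfloat16 weights and activations
HIDDEN = 8192          # hypothetical layer width
RIDGE_POINT = 300.0    # hypothetical peak FLOP/s divided by bytes/s


def linear_intensity(m_tokens, k, n):
    """FLOPs per byte of memory traffic for an (m x k) @ (k x n) matmul."""
    flops = 2 * m_tokens * k * n  # one multiply and one add per MAC
    bytes_moved = BYTES_PER_ELEM * (k * n + m_tokens * k + m_tokens * n)
    return flops / bytes_moved


def roofline_utilization(intensity, ridge_point):
    """Fraction of peak compute attainable under a simple roofline model."""
    return min(1.0, intensity / ridge_point)


if __name__ == "__main__":
    # Decode processes one token per step; prefill processes the whole prompt.
    for phase, m in (("decode", 1), ("prefill", 2048)):
        ai = linear_intensity(m, HIDDEN, HIDDEN)
        util = roofline_utilization(ai, RIDGE_POINT)
        print(f"{phase}: {ai:.1f} FLOP/byte, up to {util:.1%} of peak compute")
```

Under these assumptions, a single-token decode step lands at roughly 1 FLOP per byte, which caps attainable compute at well under 1% of peak, while a 2048-token prefill exceeds 1,000 FLOPs per byte and is compute-bound. Batching several sequences raises the effective token count per decode step, which is one reason batch size matters so much for memory-bound generation.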

Software Building Blocks for a New Computing Paradigm

Multiple custom chip solutions have demonstrated the value of novel architectures that are not constrained by the burden of legacy compatibility. Community efforts are underway to make the software ecosystem more open to non-traditional designs. Notable examples include:

1. Torch Compile: Although the vast majority of PyTorch programs run in imperative (“eager”) mode, where each operator executes independently, torch.compile enables large parts of an AI model to be compiled and executed as a single dispatch, which is very desirable for dataflow architectures. torch.compile is a natural extension of PyTorch’s tracing and export facilities, and it allows compiled models to keep executing within the PyTorch environment, opening the door to richer applications and complex control flow

2. MX Datatypes: After many iterations of vendor-defined datatypes (e.g. bfloat16 and variants of FP8), the Open Compute Project (OCP) has helped standardize microscaling (MX) datatypes, which are immensely valuable for tensor representation in AI. Because each small block of elements (32 in the OCP specification) shares a single power-of-two scale, MX lets chip designers leverage low-precision computation and storage for higher efficiency while remaining interoperable with compatible external solutions

3. Triton DSL: To enable non-CUDA GPUs (and custom accelerators) to execute optimized kernel programs, the Triton domain-specific language (DSL) provides a hardware-agnostic device abstraction that maps readily to CUDA but can also be lowered to other devices via a compilable intermediate representation (IR). Triton-native kernels promise portability across accelerators without source modification, which removes a huge friction point for existing users
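
To make the block-scaling idea concrete, here is a minimal sketch of an MXINT8-style quantizer in plain Python. It assumes the OCP layout of 32-element blocks sharing one 8-bit power-of-two scale, with each element stored as an 8-bit two’s-complement integer carrying 6 fractional bits. Rounding is Python’s round-half-to-even, out-of-range codes are clamped, and the specification’s exact rounding, NaN, and special-value rules are not reproduced. The function names are hypothetical, and this is not the DMX Compressor API.

```python
import math

BLOCK_SIZE = 32  # OCP MX formats share one scale across blocks of 32 elements
FRAC_BITS = 6    # assumed MXINT8-style element: int8 with 6 fractional bits


def quantize_block(block):
    """Return (shared_exponent, int8 codes) for one block of floats."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0] * len(block)
    # frexp gives amax = m * 2**e with 0.5 <= m < 1, so floor(log2(amax)) = e - 1.
    shared_exp = math.frexp(amax)[1] - 1
    codes = []
    for v in block:
        scaled = v / 2.0 ** shared_exp         # largest magnitude now in [1, 2)
        q = round(scaled * 2 ** FRAC_BITS)     # round half to even
        codes.append(max(-128, min(127, q)))   # clamp to the int8 range
    return shared_exp, codes


def dequantize_block(shared_exp, codes):
    """Reconstruct floats from one block's shared exponent and int8 codes."""
    return [c / 2 ** FRAC_BITS * 2.0 ** shared_exp for c in codes]


def quantize_tensor(values):
    """Split a flat list into BLOCK_SIZE chunks, each with its own scale."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def bits_per_element(element_bits=8, scale_bits=8):
    """Average storage cost: BLOCK_SIZE elements plus one shared 8-bit scale."""
    return (BLOCK_SIZE * element_bits + scale_bits) / BLOCK_SIZE
```

For instance, `dequantize_block(*quantize_block([0.5, -0.25, 1.0, 3.0]))` recovers the inputs exactly because they are representable at the block’s shared scale of 2^1, and `bits_per_element()` returns 8.25: roughly half the storage of bfloat16 for a scale overhead of only a quarter bit per element.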
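
Triton’s portability comes largely from writing kernels at the level of blocks rather than individual threads. The following plain-Python emulation, which is not Triton code, mirrors the structure of Triton’s vector-addition tutorial: each program instance, identified by its program id, handles `BLOCK_SIZE` contiguous elements and uses a mask to guard the ragged last block. In real Triton these steps would use `tl.program_id`, `tl.arange`, and masked `tl.load`/`tl.store`, and the compiler, not the kernel author, decides how each block maps onto threads, vector units, or other hardware resources. `BLOCK_SIZE`, `add_kernel`, and `launch` are illustrative names.

```python
# Plain-Python emulation of Triton's block-level programming model.
# Real Triton would use tl.program_id, tl.arange, and masked tl.load/tl.store;
# plain lists and loops stand in for them here.

BLOCK_SIZE = 4  # hypothetical: elements handled by one program instance


def add_kernel(x, y, out, n_elements, pid):
    block_start = pid * BLOCK_SIZE
    # ~ block_start + tl.arange(0, BLOCK_SIZE)
    offsets = [block_start + i for i in range(BLOCK_SIZE)]
    # ~ mask = offsets < n_elements, guarding the ragged last block
    mask = [off < n_elements for off in offsets]
    for off, valid in zip(offsets, mask):
        if valid:
            out[off] = x[off] + y[off]


def launch(x, y):
    n = len(x)
    out = [0] * n
    # ~ triton.cdiv(n, BLOCK_SIZE): enough program instances to cover n
    grid = (n + BLOCK_SIZE - 1) // BLOCK_SIZE
    # Instances are independent, so a backend may run them in any order or in parallel.
    for pid in range(grid):
        add_kernel(x, y, out, n, pid)
    return out
```

`launch([1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60])` runs two program instances and returns `[11, 22, 33, 44, 55, 66]`; the mask disables offsets 6 and 7 in the second instance.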

While these efforts to make the computing ecosystem more accessible are commendable, there is more work to be done. To unlock a new era of AI compute solutions with innovative memory-compute integration, and to accelerate memory-bound generative AI workloads, it is critical to enable new software abstractions as well. Many of these efforts, and others, require collaboration across companies, standards bodies, and community participants, and we look forward to working with them on these ambitious objectives.